Introduction to data enrichment based on email addresses and domain names

03.08.2020 - Jay M. Patel

Data enrichment is a general term for any process that enhances your existing database with additional information, which can then be used to drive business goals such as marketing, sales and lead generation, customer relations through targeted outreach, and preventing customer churn. Plainly speaking, all you are doing is going to a third-party data vendor and fetching additional data based on some common key. One of the most common keys for data enrichment is an email address or a company’s domain name.
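As a minimal sketch of the idea, assuming the vendor data has already been loaded into a plain dictionary keyed by domain, the email-to-domain join could look like this. `VENDOR_DATA`, `FREE_EMAIL_DOMAINS`, `email_domain` and `enrich` are hypothetical names for illustration, not any particular vendor's API.

```python
from typing import Dict, List, Optional

# Hypothetical vendor data keyed by company domain; in practice this comes
# from a third-party vendor's API or bulk export.
VENDOR_DATA: Dict[str, dict] = {
    "example.com": {"company": "Example Inc.", "industry": "Software"},
    "acme.org": {"company": "Acme Corp.", "industry": "Manufacturing"},
}

# Free webmail domains say nothing about the employer, so we skip them.
FREE_EMAIL_DOMAINS = {"gmail.com", "yahoo.com", "outlook.com", "hotmail.com"}


def email_domain(email: str) -> Optional[str]:
    """Return the lowercased domain of an email address, or None if malformed."""
    local, sep, domain = email.strip().rpartition("@")
    if not sep or not local or not domain:
        return None
    return domain.lower()


def enrich(records: List[dict]) -> List[dict]:
    """Attach vendor fields to each record, joining on the email domain."""
    enriched = []
    for record in records:
        domain = email_domain(record["email"])
        extra = {}
        if domain is not None and domain not in FREE_EMAIL_DOMAINS:
            extra = VENDOR_DATA.get(domain, {})
        enriched.append({**record, "domain": domain, **extra})
    return enriched


leads = [
    {"name": "Alice", "email": "alice@Example.com"},
    {"name": "Bob", "email": "bob@gmail.com"},
    {"name": "Carol", "email": "not-an-email"},
]
for row in enrich(leads):
    print(row)
```

Skipping free webmail domains is a design choice: a gmail.com address tells you nothing about where the person works, so looking it up would only add noise.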

Read more… (~4 Min.)

How to do full text searching in Python using Whoosh library

02.08.2020 - Jay M. Patel

Full-text searching with fast indexing is essential for going through large quantities of text in Pandas dataframes to power your data science workflows. Traditionally, we could use a full-text search engine such as Elasticsearch, Apache Solr or Amazon CloudSearch for this; however, that is impractical for one-off requirements or when working with only a few GB of data. In that case, you can simply load your text into a Pandas dataframe and use regex to perform the search.
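A minimal sketch of that regex approach, assuming a small hypothetical dataframe and a hypothetical helper `regex_search`. Note that `Series.str.contains` scans every row on each query; that is fine for a one-off search, but it is exactly the cost an index (as built by Whoosh) avoids when you search the same text repeatedly.

```python
import re

import pandas as pd

# Hypothetical sample documents; in practice, load your own text here.
df = pd.DataFrame({
    "title": ["Intro to Whoosh", "Pandas tips", "Elasticsearch setup"],
    "text": [
        "Whoosh is a pure-Python full text search library.",
        "Use vectorized string methods to filter a DataFrame quickly.",
        "Elasticsearch is a distributed search engine.",
    ],
})


def regex_search(frame: pd.DataFrame, column: str, pattern: str) -> pd.DataFrame:
    """Return the rows whose `column` matches `pattern`, ignoring case."""
    mask = frame[column].str.contains(pattern, flags=re.IGNORECASE, regex=True, na=False)
    return frame[mask]


# \b word boundaries match "search" as a whole word, not inside "Elasticsearch".
hits = regex_search(df, "text", r"\bsearch\b")
print(hits["title"].tolist())
```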

Read more… (~3 Min.)

How to create PDF documents in Python using the FPDF library

01.08.2020 - Jay M. Patel

PDF files are ubiquitous in our daily lives, and it’s a good idea to spend a few minutes learning how to generate them programmatically in Python. In this tutorial, we will take structured data in JSON and convert it into a PDF. PDFs typically contain text paragraphs, hyperlinks and images, so we will work with a dataset containing all of these for a more realistic experience. First, let us fetch the data from a publicly available API containing news articles scraped over the past 24 hours.

Read more… (~3 Min.)

Introduction to machine learning metrics

01.01.2020 - Jay M. Patel

Machine learning (ML) is a field of computer science that gives computer systems the ability to progressively improve performance on a specific task, i.e., to learn from data, without being explicitly programmed. Taking a 50,000 ft view, we either want to model a given dataset to make predictions, or we want a model that describes a given dataset so we can gain valuable insights. There are three general areas of machine learning models.
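Since this post is about metrics, here is a hedged sketch of the most common ones for a binary classifier (accuracy, precision, recall and F1), computed from scratch on hypothetical labels so the formulas stay visible. It covers only binary classification; libraries such as scikit-learn provide equivalent functions in `sklearn.metrics`.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true/false positives and negatives for a binary classifier."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            if actual == positive:
                tp += 1
            else:
                fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1; returns 0.0 where a ratio is undefined."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted positives, how many are right
    recall = tp / (tp + fn) if tp + fn else 0.0  # of actual positives, how many we found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labels: 1 = positive class, 0 = negative class.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
print(classification_metrics(y_true, y_pred))
```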

Read more… (~6 Min.)

Introduction to web scraping in Python using Beautiful Soup

14.02.2019 - Jay M. Patel

The first step for any web scraping project is getting the webpage you want to parse. There are many Python libraries for requesting pages over HTTP, such as urllib, urllib2 and urllib3; however, none of them match the elegance of the requests library, which we have used in earlier posts on REST APIs and will continue to use here. Before we get into the workings of Beautiful Soup, let us first get a basic understanding of HTML structure, common tags and style sheets.
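A minimal sketch of parsing with Beautiful Soup, assuming the `bs4` package is installed. To keep it self-contained, the HTML below is a hypothetical inline string rather than a page fetched with requests; in practice you would parse `requests.get(url).text` instead.

```python
from bs4 import BeautifulSoup

# Hypothetical page; a real scraper would download this with requests.
html = """
<html>
  <head><title>Example page</title></head>
  <body>
    <h1 class="headline">Hello, scraping</h1>
    <p>Read the <a href="https://example.com/docs">docs</a> or the
       <a href="https://example.com/blog">blog</a>.</p>
  </body>
</html>
"""

# "html.parser" is Python's built-in parser, so no extra parser is required.
soup = BeautifulSoup(html, "html.parser")

print(soup.title.string)  # text inside <title>
print(soup.find("h1", class_="headline").get_text(strip=True))  # tag selected by CSS class

# Collect the href attribute of every <a> tag that has one.
links = [a["href"] for a in soup.find_all("a", href=True)]
print(links)
```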

Read more… (~5 Min.)

Why is web scraping essential and who uses web scraping?

10.02.2019 - Jay M. Patel

Web scraping, also called web harvesting, web data extraction, or even web data mining, refers to software or code designed to automate downloading and parsing data from the web. Nowadays many websites, such as Twitter and Facebook, provide REST-based Application Programming Interfaces (APIs) to programmatically consume the structured data available on their websites, and data obtained that way is usually not only “cleaner” but also easier and more hassle-free to get than through web scraping.

Read more… (~3 Min.)

Natural language processing (NLP): word embeddings, word2vec, GloVe-based text vectorization in Python

08.02.2019 - Jay M. Patel

In the previous posts, we looked at count vectorization and term frequency-inverse document frequency (tf-idf) to convert a given text document into vectors that can be used as features for text classification tasks, such as classifying emails as spam or not spam. The major disadvantages of such approaches are that the generated vectors are sparse (mostly made of zeros) and very high-dimensional (the same dimensionality as the number of words in the vocabulary).
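To illustrate the contrast with sparse count vectors, here is a sketch that turns a document into a dense vector by averaging its word embeddings, which is one simple approach among several. The 3-dimensional `EMBEDDINGS` table is made up for illustration; real word2vec or GloVe vectors are learned from large corpora. Note that the document vector has `DIM` entries no matter how large the vocabulary grows.

```python
import math

# Toy, hypothetical embeddings. Real word2vec/GloVe vectors are learned from
# large corpora and usually have 50 to 300 dimensions.
EMBEDDINGS = {
    "free": [0.9, 0.1, 0.0],
    "money": [0.8, 0.2, 0.1],
    "prize": [0.85, 0.05, 0.1],
    "meeting": [0.1, 0.9, 0.2],
    "agenda": [0.05, 0.8, 0.3],
}
DIM = 3


def document_vector(text):
    """Average the embeddings of known words; unknown words are skipped."""
    vectors = [EMBEDDINGS[w] for w in text.lower().split() if w in EMBEDDINGS]
    if not vectors:
        return [0.0] * DIM
    return [sum(component) / len(vectors) for component in zip(*vectors)]


def cosine(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


spam = document_vector("win free money prize")
other = document_vector("free money offer")
work = document_vector("meeting agenda attached")
print(round(cosine(spam, other), 3), round(cosine(spam, work), 3))
```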

Read more… (~8 Min.)

Natural language processing (NLP): term frequency-inverse document frequency (tf-idf) based vectorization in Python

07.02.2019 - Jay M. Patel

In the previous post, we looked at count vectorization to convert a given document into sparse vectors that can be used as features for text classification tasks, such as classifying emails as spam or not spam. Count vectorization has one major drawback: it weights every word (or token) by its raw count alone, so common words that appear throughout the corpus get as much weight as distinctive ones, and this makes it a poor representation for the semantic analysis of a sentence.
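A from-scratch sketch of one common tf-idf variant: tf is a term's count divided by the document length, and idf is log(N / df), where N is the number of documents and df is the number of documents containing the term. Library implementations differ in the details (scikit-learn's `TfidfVectorizer`, for instance, smooths the idf and L2-normalizes rows by default), so the exact numbers will not match theirs. The sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(docs):
    """Return one {term: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)

    # Document frequency: in how many documents does each term appear?
    df = Counter()
    for tokens in tokenized:
        df.update(set(tokens))
    idf = {term: math.log(n_docs / count) for term, count in df.items()}

    weights = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        weights.append({term: (c / total) * idf[term] for term, c in counts.items()})
    return weights


docs = ["the free prize is waiting", "the meeting is at noon", "the prize draw is free"]
for doc, weights in zip(docs, tf_idf(docs)):
    print(doc, "->", {t: round(v, 3) for t, v in weights.items()})
```

Words such as "the" and "is" occur in every document, so their idf, and hence their weight, drops to zero, which is precisely the down-weighting that raw counts lack.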

Read more… (~5 Min.)

Natural language processing (NLP): text vectorization and bag of words approach

04.02.2019 - Jay M. Patel

Let us consider a simple task: classifying a given email as spam or not spam. As you might have already guessed, this is an example of a simple binary classification problem, with target or label values of 0 or 1, within the larger field of supervised machine learning (ML). However, we still need to convert the non-numerical text contained in emails into numerical features that can be fed into an ML algorithm of our choice.
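A minimal bag-of-words sketch in plain Python, assuming simple lowercasing and whitespace tokenization (real pipelines usually handle punctuation as well, for example scikit-learn's `CountVectorizer`). The emails and labels are hypothetical.

```python
from collections import Counter


def build_vocabulary(docs):
    """Map each distinct lowercased word to a column index (sorted for stability)."""
    vocab = sorted({word for doc in docs for word in doc.lower().split()})
    return {word: idx for idx, word in enumerate(vocab)}


def count_vectorize(doc, vocabulary):
    """Turn one document into a vector of word counts over the vocabulary."""
    vector = [0] * len(vocabulary)
    for word, count in Counter(doc.lower().split()).items():
        if word in vocabulary:  # words unseen when building the vocabulary are ignored
            vector[vocabulary[word]] = count
    return vector


emails = ["Win a free prize now", "Meeting moved to noon", "Free free prize inside"]
labels = [1, 0, 1]  # hypothetical targets: 1 = spam, 0 = not spam

vocab = build_vocabulary(emails)
X = [count_vectorize(email, vocab) for email in emails]
print(sorted(vocab, key=vocab.get))
for row in X:
    print(row)
```

`X` and `labels` are now in the numeric form that a binary classifier expects.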

Read more… (~4 Min.)

Top data science interview questions and answers

02.02.2019 - Jay M. Patel

Machine learning basics

1. What is machine learning?

Machine learning (ML) is a field of computer science that gives computer systems the ability to progressively improve performance on a specific task, i.e., to learn from data, without being explicitly programmed. Taking a 50,000 ft view, we either want to model a given dataset to make predictions, or we want a model that describes a given dataset so we can gain valuable insights.

Read more… (~7 Min.)

Using Twitter REST APIs in Python to search and download tweets in bulk

01.02.2019 - Jay M. Patel

Getting Twitter data

Let’s use the Tweepy package in Python instead of handling the Twitter API directly. We will use the package for two things: authorizing ourselves to use the API, and then using the cursor to access the Twitter search APIs. Let’s go ahead and load our imports:

```python
import tweepy
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
import numpy as np
```

Read more… (~5 Min.)

Introduction to natural language processing: rule-based methods, named entity recognition (NER), and text classification

01.02.2019 - Jay M. Patel

The ability of computers to understand human languages is referred to as Natural Language Processing (NLP). This is a vast field, and practitioners frequently include machine translation and natural language generation (NLG) as part of core NLP. However, in this section we will only look at NLP techniques that aim to extract insights from unstructured text.

Regular expressions (Regex) and rule-based methods

Regular expressions (Regex) match patterns in sequences of characters, and they are supported in a wide variety of programming languages.
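As a small example of the rule-based approach, the sketch below pulls email addresses and ISO-style dates out of a hypothetical sentence using Python's built-in `re` module. The patterns are deliberately simplified and will not accept every valid email address.

```python
import re

text = "Contact sales@example.com or support@example.org before 2019-02-01. Call 555-0100."

# Simplified email pattern: local part, "@", then one or more dot-separated labels.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Dates written as YYYY-MM-DD, bounded by word boundaries.
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")

emails = EMAIL_RE.findall(text)
dates = DATE_RE.findall(text)
print(emails)
print(dates)
```

Because the email pattern uses a non-capturing group `(?:...)`, `findall` returns whole matches rather than just the group contents.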

Read more… (~3 Min.)

Get started with Git and GitHub in under 10 minutes

28.10.2018, last updated 20.02.2019 - Jay M. Patel

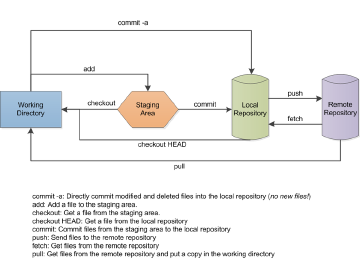

Git is probably the most popular version control system in use right now, and if you have experience with other version control systems such as Subversion, it’s strongly recommended that you go through this section, since many concepts from other systems don’t translate directly to Git.

Git basics

You can create a local Git repository on your computer by either cloning an existing Git repository from a remote repository on the internet…

Read more… (~6 Min.)