Natural language processing (NLP): term frequency - inverse document frequency (Tf-idf) based vectorization in Python

07.02.2019 - Jay M. Patel - Reading time ~5 Minutes

In the previous post, we looked at count vectorization, which converts a document into a sparse vector that can be used as features for a text classification task, such as classifying emails as spam or not spam.

Count vectorization has one major drawback: it treats every word (or token) in the corpus as equally important and weights it only by its raw count, which makes it a poor representation of what a sentence means. To see why, consider the first two sentences of Jane Austen’s classic novel Pride and Prejudice:

It is a truth universally acknowledged, that a single man in possession of a good fortune, must be in want of a wife. However little known the feelings or views of such a man may be on his first entering a neighbourhood, this truth is so well fixed in the minds of the surrounding families, that he is considered the rightful property of some one or other of their daughters.

Words such as “it”, “is”, “that” and “this” contribute little to the meaning of these sentences. They are common across all English documents, not just Pride and Prejudice, and are known as stop words.

In contrast, words such as “single”, “man”, “want” and “wife” tell us what the sentence is about, so they should be weighted more heavily than the stop words if we want to capture how much each word contributes to the meaning of the sentence.

Term Frequency - Inverse Document Frequency (Tf-idf) based vectorization is one such method: it assigns different weights to words based on how often they occur across the corpus.

Term frequency

Term Frequency (TF) is the number of times a word w appears in a document d divided by the total number of words in that document:

$$\mathrm{tf}(w, d) = \frac{\text{number of times } w \text{ appears in } d}{\text{total number of words in } d}$$

TF will be high for stop words, whereas it will be fairly low for the rarer words that carry most of a sentence’s meaning.
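As a rough illustration of the definition above, the sketch below computes term frequencies for the first Austen sentence. The regex tokenizer is an assumption made for brevity, not the tokenizer scikit-learn uses.

```python
import re
from collections import Counter

sentence = ("It is a truth universally acknowledged, that a single man in "
            "possession of a good fortune, must be in want of a wife.")

# Lowercase and pull out runs of word characters (a deliberately simple tokenizer).
tokens = re.findall(r"\w+", sentence.lower())

counts = Counter(tokens)
total = len(tokens)

# Term frequency: occurrences of a word divided by the total words in the document.
tf = {word: count / total for word, count in counts.items()}

# Print the five most frequent words with their term frequency.
for word, value in sorted(tf.items(), key=lambda kv: kv[1], reverse=True)[:5]:
    print(f"{word:12s} {value:.3f}")
```

The stop word “a” occurs four times in this sentence, so it gets a higher term frequency than “wife” or “fortune”, which occur once each.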

Inverse document frequency

The Inverse Document Frequency idf(w) of a word w is the logarithm of the total number of documents N divided by the document frequency df(w), which is the number of documents in the collection that contain w:

$$\mathrm{idf}(w) = \log\frac{N}{\mathrm{df}(w)}$$

Term frequency - inverse document frequency (Tf-idf)

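Term Frequency - Inverse Document Frequency tfidf(w) of a word w in a document d is simply the product of its term frequency and its inverse document frequency:

$$\mathrm{tfidf}(w, d) = \mathrm{tf}(w, d) \times \mathrm{idf}(w)$$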
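To make the product concrete, here is a minimal from-scratch sketch on a made-up three-document corpus. The corpus and the helper name `tfidf` are hypothetical, and the idf uses the plain log(N / df) form described above rather than any library's variant.

```python
import math
from collections import Counter

# Hypothetical three-document corpus, already tokenized, for illustration only.
corpus = [
    ["the", "fox", "chased", "the", "hare"],
    ["the", "hare", "outran", "the", "tortoise"],
    ["the", "tortoise", "won", "the", "race"],
]

n_docs = len(corpus)

# Document frequency: number of documents containing each word at least once.
df = Counter(word for doc in corpus for word in set(doc))

# idf(w) = log(N / df(w)), the plain form described in the text.
idf = {word: math.log(n_docs / count) for word, count in df.items()}


def tfidf(doc):
    """Return {word: tf * idf} for one tokenized document."""
    counts = Counter(doc)
    return {word: (count / len(doc)) * idf[word] for word, count in counts.items()}


weights = tfidf(corpus[0])
for word, value in sorted(weights.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:8s} {value:.4f}")
```

Here “the” appears in every document, so its idf is log(1) = 0 and its weight vanishes despite its high term frequency, while “fox” (found in one document) outranks “hare” (found in two). Note that scikit-learn's default differs from this plain form: it uses raw counts for tf, a smoothed idf of ln((1 + N) / (1 + df)) + 1, and L2-normalizes each row, so its numbers will not match this sketch exactly.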

Term frequency - inverse document frequency (Tf-idf) with scikit-learn

Let us take the block of text below from a random page on OpenLearn.

text = '''As a psychologist and coach, I have long been interested in the power of stories to convey important lessons. Some of my earliest recollections were fables, stories involving animals as protagonists. Fables are an oral story-telling tradition typically involving animals, occasionally humans or forces of nature interacting with each other. The best-known fables are attributed to the ancient Greek storyteller Aesop, although there are fables found in almost all human societies and oral traditions making this an almost universal feature of human culture. A more modern example of an allegorical tale involving animals is George Orwell’s Animal Farm , in which animals rebel against their human masters only to recreate the oppressive system humans had subjected them to. Fables work by casting animals as characters with minds that are recognisably human. This superimposition of human minds and animals serves as a medium for transmitting and teaching moral and ethical values, in particular to children. It does this by drawing on the symbolic connection between nature and society and the dilemmas that this connection can be used to represent. Fables have survived into the modern age, although their role seems to have waned under the emergence of other storytelling media. Animals are, thus, still a feature of modern day stories (e.g. David Attenborough’s wildlife programmes, stories about animals in the news, and and CGI-heavy children’s cartoons involving cute animal characters). Our more immediate and visceral connection with nature and with the different type of animal species seems to have been reduced to being observers rather than participants. Wild animals in ancient times were revered as avatars of ancient gods but also viewed with fear. 
By and large, our modern position as observer in relation to animal stories is that we remotely view animals in peril from climate change on television and social media news coverage, but we may remain disconnected to the fundamental moral lessons their stories could teach us today.'''

Let us strip any HTML tags and punctuation from the text, and lowercase it, using a small regex-based function.

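    import re

    def preprocessor(text):
        # Strip HTML tags.
        text = re.sub(r'<[^>]*>', '', text)
        # Collect emoticons such as :-) so they survive punctuation removal.
        emoticons = re.findall(r'(?::|;|=)(?:-)?(?:\)|\(|D|P)', text)
        # Lowercase, replace runs of non-word characters with a space,
        # then append the emoticons with any hyphen removed.
        text = (re.sub(r'[\W]+', ' ', text.lower()) +
                ' '.join(emoticons).replace('-', ''))
        return text

    # Apply the function to the block of text defined above.
    text = preprocessor(text)

After preprocessing, the text looks as shown below: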

Figure 1: Text after applying preprocessing function
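To see what each step of the preprocessing does, the sketch below runs the same function on a short made-up string containing an HTML tag, punctuation and an emoticon. The sample string is hypothetical and chosen only to exercise each branch.

```python
import re


def preprocessor(text):
    # Strip HTML tags.
    text = re.sub(r'<[^>]*>', '', text)
    # Collect emoticons so they can be re-appended after punctuation removal.
    emoticons = re.findall(r'(?::|;|=)(?:-)?(?:\)|\(|D|P)', text)
    # Lowercase, collapse non-word characters to spaces, append emoticons.
    text = (re.sub(r'[\W]+', ' ', text.lower()) +
            ' '.join(emoticons).replace('-', ''))
    return text


# Hypothetical sample input, not taken from the article's text.
sample = "<p>Fables work, don't they? :-)</p>"
cleaned = preprocessor(sample)
print(repr(cleaned))  # 'fables work don t they :)'
```

The apostrophe in “don't” counts as a non-word character, so the word is split into “don” and “t”. That is acceptable for a bag-of-words model, but worth knowing when you inspect the resulting vocabulary.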

Now the text is ready to be fed into scikit-learn’s TfidfVectorizer, which converts it into a tf-idf weighted bag-of-words representation.

    import pandas as pd
    from sklearn.feature_extraction.text import TfidfVectorizer

    # Learn the vocabulary and idf weights, then transform the text in one step.
    vectorizer = TfidfVectorizer()
    X = vectorizer.fit_transform([text])

You can inspect the sparse tf-idf vector by converting it into a pandas DataFrame.

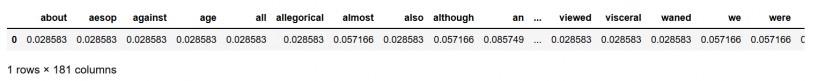

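    # Transform the text with the fitted vectorizer (same result as X above).
    dtm = vectorizer.transform([text])

    # get_feature_names_out() replaces get_feature_names(), which was removed in scikit-learn 1.2.
    pd.DataFrame(dtm.toarray(), columns=vectorizer.get_feature_names_out())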

Figure 2: pandas dataframe from tf-idf vectors

You can use the vectors created above directly for text classification; in the next post we will show how tf-idf vectors lead to more accurate classification models than the count vectors from the previous post.